The Rise–and Fall–of DevOps

A Session with Jon Hawk, Director of Cloud Engineering

This week, we sat down with Jonathan Hawk, Mindgrub’s Director of Cloud Engineering, to discuss the rise and fall of DevOps.

Before we begin, what is DevOps?

GitLab states that DevOps can be best explained as “people working together to conceive, build and deliver secure software at top speed.”

But according to Hawk, DevOps might not be around for much longer.

He predicts that DevOps will be outpaced and outdated within the next 5 to 10 years! Still, the inception of DevOps and its best practices are just as interesting as its impending end. After all, we have to learn from the past in order to futureproof our next endeavors in tech.

Find out what’s happening with DevOps, learn about the history of evolving tech and how it plays into our modern-day computing standards–and discover a few fun facts about the origins of computers.

Watch or read the full transcript of his presentation below!

“So today's topics! We are going to define the term DevOps, identify its predecessors, describe the inception of DevOps, run through a few of the best practices that are detailed in DevOps, and discuss its impending end.

DevOps is probably not long for this world, and you'll find out why. So let's start at the top. A few billion years ago, there was an explosion of some renown. Okay. That's too far back. (Laughs) The last time I checked, it was 2022.”

The Definition of DevOps

The term DevOps has been around for about 14 years. So let's look at how some companies that you may be familiar with define the term DevOps today:

First is AWS (Amazon Web Services) which says ‘DevOps is the combination of cultural philosophies, practices, and tools that increases an organization's ability to deliver applications and services at high velocity.’

If you notice, nowhere in there did they say anything about cloud infrastructure or source control, or continuous integration!

Microsoft says that ‘[DevOps] is the union of people, process, and technology to continually provide value to customers.’

GitLab says something similar: ‘DevOps can be best explained as people working together to conceive, build and deliver secure software at top speed.’

And finally, Atlassian says “DevOps is a set of practices, tools, and a cultural philosophy that automate and integrate the processes between software development and IT teams.”

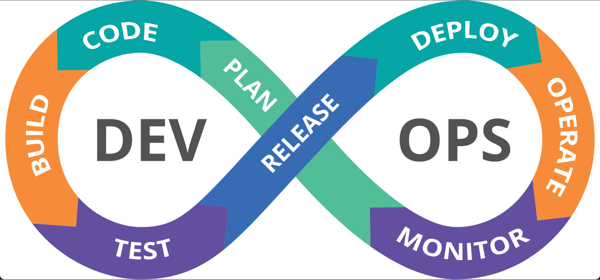

As a term, DevOps has clearly had a long and storied career. So how does the continual improvement these definitions describe actually happen? The answer is The Loop. Each iteration through the DevOps Loop is meant to refine and improve the system in the project where you are applying DevOps. A single iteration through this Loop could take hours, days, or even weeks.

The DevOps Loop:


Let's walk through an example: a new feature is requested. The specs are planned, implemented, compiled, tested, delivered, installed, run, and monitored. We notice that some users' requests take 10 seconds, so the operations team opens a development ticket to reduce that time.

The improvement is planned, implemented, compiled, and so on.

The names of the steps within The Loop are certainly up for debate. But one thing is certain: the result of each step is what we call an ‘artifact’. For example, the artifacts of planning are requirements.

Other steps give you data or value, and monitoring gives you metrics. Just like in a continuous integration or continuous delivery process, each step produces an artifact that you then use in subsequent steps. So DevOps itself is a method to deliver artifacts. It's a set of opinionated practices for doing so, and it doesn't work in a vacuum.
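To make the idea of artifacts concrete, here is a minimal Python sketch of one pass through the Loop, modeled on the 10-second example above. It assumes each step is a function that takes the previous step's artifact and returns a new one; the step functions, artifact fields, and the 2-second threshold are hypothetical illustrations, not part of any DevOps tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Artifact:
    """A hypothetical artifact handed from one step of the Loop to the next."""
    kind: str
    payload: dict = field(default_factory=dict)


def plan(request: str) -> Artifact:
    # Planning turns a feature request into requirements.
    return Artifact("requirements", {"feature": request})


def build(requirements: Artifact) -> Artifact:
    # Implementing and compiling turn requirements into a build.
    return Artifact("build", {"feature": requirements.payload["feature"]})


def verify(build_artifact: Artifact) -> Artifact:
    # Testing turns a build into a verified build.
    return Artifact("verified_build", {**build_artifact.payload, "tests_passed": True})


def run_and_monitor(verified: Artifact, latencies_s: list) -> Artifact:
    # Delivering, installing, running, and monitoring yield metrics.
    return Artifact("metrics", {"feature": verified.payload["feature"],
                                "max_latency_s": max(latencies_s)})


def review(metrics: Artifact, threshold_s: float = 2.0) -> Optional[str]:
    # If requests are too slow, operations opens a development ticket,
    # which becomes the input to the next iteration's planning step.
    slowest = metrics.payload["max_latency_s"]
    if slowest > threshold_s:
        return f"Reduce request time for {metrics.payload['feature']} (saw {slowest}s)"
    return None


def run_iteration(request: str, latencies_s: list) -> Optional[str]:
    verified = verify(build(plan(request)))
    return review(run_and_monitor(verified, latencies_s))


ticket = run_iteration("report export", [0.4, 1.2, 10.0])
# ticket == "Reduce request time for report export (saw 10.0s)"
```

The review step's output is a ticket rather than a finished product; feeding it back into `plan` is what closes the Loop.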

DevOps is closely akin to Agile. It's also akin to Extreme Programming, Six Sigma, Lean, or any other philosophy that you could come up with for getting a project done, and it involves every skill set in the company, from sales to testing and monitoring.

Everybody has a place in this process.

It just so happens that DevOps includes best practices for cloud computing because it emerged alongside cloud computing, but otherwise, they are not synonymous. If you want an example of another set of practices that arose alongside this technology, we can look to edge computing. It's a paradigm that arose alongside the Internet of Things (or IoT). The idea is you process your workloads at the point of data collection, as opposed to sending a huge amount of data over the wire.

So here is just another example of a set of practices that evolved with technology. Want another one? Inbox Zero, which evolved alongside email. Personally, I think, you know, we should just replace email with something else, but that’s just me.

Let's talk about how DevOps became a thing. It wouldn't have evolved unless utility computing, or cloud computing, had become a thing first.


The Origin of DevOps (and more!)

So how did we get there? The answer is, of course, money. No, really. Utility or cloud computing was just a business decision. It's a natural evolution of infrastructure, like electricity. Electricity used to be something novel and innovative that only mad scientists could harness, and then it became a commodity. Now, electricity is just a monthly bill.

It's boring and ubiquitous and everywhere. The same thing happened to compute.

Once we had ubiquitous electricity, computing went from something novel and innovative that only mad scientists had access to, to a commodity. And now, people get a monthly bill for the cloud resources that they use.

Cloud computing is not a new idea by any stretch. John McCarthy and Douglas Parkhill talked about the idea back in the 60s, McCarthy in a speech at MIT and Parkhill in his book The Challenge of the Computer Utility. Even though the concept isn't new, the computing industry needed a couple of things to happen before either cloud computing could be fully realized or the practices that we now refer to as DevOps could exist. Those things are diffusion and commoditization.

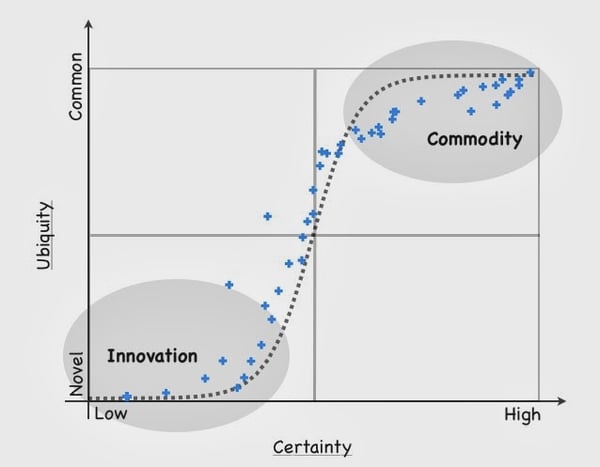

Let's see. So, if you remember science class, you'll remember molecular diffusion is when particles spread out and achieve equilibrium where they're distributed in a uniform manner. The same is true of technology. As time goes on, more consumers adopt the component, whatever that component is.

Inventions evolve toward commodities naturally, especially if they are viable.

During this evolution, sets of practices co-evolve with the component. The way that you work with the prototype of some component is not the same set of practices that you use when that component is a utility or a commodity. The graph that I'm showing here has data from the radio, television, and telephone.

Bits or Pieces? By Simon Wardley

You can see that there's this sort of S-curve relationship between the availability or the ubiquity of a component and how well-defined it is. We call that process commoditization. That is the tendency for technology to move through these distinct phases as it goes towards utility.

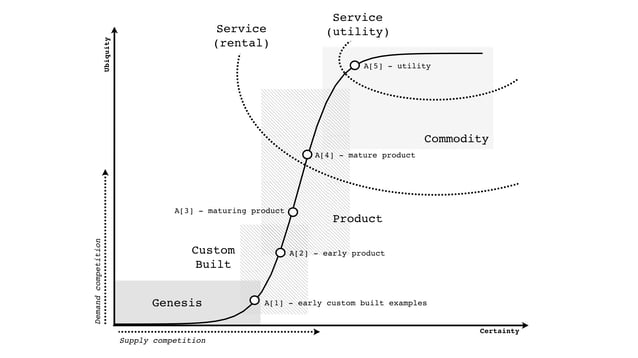

Each stage comprises its own set of practices. Things start at the genesis of an idea (proof of concept, experiments, and prototypes), then move on to custom-built solutions, then to mass production, off-the-shelf purchases, and rentals, and finally to commodities: commonplace, just another cost of doing business.

Now the amount of time that something might take to leap between these areas could be months, or it could be years. It all depends on the component in question. Let's look at electricity as an example. It started when there was a great boom in the 1800s in electromagnetism research.

In 1821, Faraday managed to invent an electric motor. About 10 years later, Hippolyte Pixii built an AC generator. It came with a hand crank and everything! Then in the 1870s, the Belgian inventor Zénobe Théophile Gramme produced the DC dynamo, the first generator to produce power on a commercial scale for industry.

And then in the 1880s, that's when you had the War of Currents. This was led by industry titans like Westinghouse, Tesla, and Edison. So in a period of 60 years, electricity evolved from something that was relatively misunderstood and not widely known to something that started to appear in absolutely everyone's homes and businesses.

And the ways that we approached electricity changed as it evolved. The same thing is true for computing. Let's take a look at what happened there.

1941 was when we had the first programmable digital computer, the Z3, designed by a German inventor named Konrad Zuse. Ultimately, Germany didn't deem it to be very important at the time [during World War II], so it didn't get much funding, and it was destroyed by Allied bombardment in 1943. So you can't see it in a museum, but you can probably see replicas.

1951 was when we got a custom-built computer: the Lyons Electronic Office I, or LEO I for short. What's interesting is that it was built by J. Lyons and Co., a catering, food manufacturing, and hotel conglomerate.

They made this thing [the LEO I] for a competitive advantage, not because they were an IT company. But it wasn't long before an IT company got involved: IBM debuted the IBM 650 in 1954, the world's first mass-produced computer. About 2,000 of these were made in eight years, and you could rent one of these monsters for just a couple thousand dollars a month!

In the sixties, we had the grandfather of DevOps, our first set of practices related to computer projects: batch processing! Programs were entered manually into control panels by machine operators. That was their job: to push the buttons.

To support such an operation, programmers used card punches and paper tape writers to write their programs offline. Once all the typing or punching was complete, they would submit the programs, in a file folder or a box, to the operations team, which then scheduled them to be run.

When the program run was completed, the output, which was generally a printed paper copy, was returned to the programmer for inspection and analysis. The complete process might take days, during which time the programmer never saw or touched the computer. It wasn't long before the next methodology arose. It was called time-sharing, not related to vacationing, of course. (Laughs)

This developed out of the realization that any single user would make inefficient use of a computer just because of how they work with it. But a large group of users together would be relatively efficient. This is because an individual user has bursts of information followed by long pauses, but a group of people working at the same time would mean that the pauses of one user could be filled by the activity of the others.

So given optimal group size, the overall process would be very efficient.
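The efficiency argument can be made concrete with a back-of-the-envelope model. This model is our assumption rather than anything from the talk: each user independently needs the machine a fraction p of the time, so the machine sits idle only when all n users pause at once, giving a utilization of 1 - (1 - p)^n.

```python
def machine_utilization(active_fraction: float, users: int) -> float:
    """Fraction of time at least one of `users` independent users needs the CPU.

    Assumes each user is busy `active_fraction` of the time, independently of
    the others. This is a simplification: real workloads are correlated, and
    users who want the CPU at the same moment queue rather than run at once.
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    # The machine is idle only when every user is pausing simultaneously.
    idle_probability = (1.0 - active_fraction) ** users
    return 1.0 - idle_probability


for n in (1, 5, 20):
    print(n, round(machine_utilization(0.1, n), 3))
```

With p = 0.1, one user keeps the machine busy about 10% of the time, five users about 41%, and twenty users about 88%. The model ignores contention; as utilization approaches 100%, users increasingly wait on each other, which is why there is an optimal group size rather than "more is always better."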

The practices that we had at the time evolved along with the product. In the late 70s, computers became inexpensive and small enough that diffusion to everybody would be inevitable. Microcomputers had color graphics and it wouldn't be long before we had my personal favorite, the Commodore 64.

Which debuted, of course, the year that I was born [1982]. I had one, and it had a 1200 baud modem. That's right! And it was this computer that I used to learn how to program in BASIC. Fast forward a couple of decades, and we get to utility compute becoming widely available around 2006, when Amazon EC2 launched in beta. I think it exited beta and went into production in 2008.


The Ubiquity of Computing

We saw computing and electricity move through those distinct phases, and the ways that people worked with them changed as they evolved. This graph shows the same process for a generic component, which we label A. It diffuses, meaning it gains adoption, and it commoditizes, meaning it evolves toward utility or ubiquitous use.

Bits or Pieces? By Simon Wardley

Sometimes there are external factors that keep things from evolving. For example, if your company has had great success with a component as a product, you are probably not going to be the one who takes it to utility or commodity, because you've developed inertia.

Companies like that are usually outdone by another actor. And the sets of practices that fit nicely with a previous phase, like the product phase, are probably not going to apply at all to the next phase. So when something becomes a utility, most companies avoid crossing that line.

They avoid jumping that chasm. They tend to do what everybody else does, but that's a good thing, believe it or not. When a business achieves success, when it gets a tactical advantage through a component that it has developed, more pressure is placed on the entire industry to commoditize that component. When something becomes ubiquitous, we can then use it to build other things on top.

We call those higher-order systems, and those higher-order systems lead to new sets of practices, on top of which you can build still more higher-order systems. So it's like: we've got electricity. Great. We can build computers. So we built computers. Well, what can we do now?

Computers are now ubiquitous. Well, what can we do with that? We can make a distributed queuing service. Well, what could we do with that? We can tabulate American Idol votes from callers at breakneck speed. This is the process of componentization that Herbert Simon described.

He said that when you have repeatable, understood, and standardized components, a system can use them to evolve. One of the best examples I can think of is in 1800, when Henry Maudslay introduced a lathe that would cut screws and screw threads to a specific size and pitch. This led to an explosion of machines built using standardized screws and nuts.

This process has been repeated over and over again for thousands of years. The so-called Parthian Battery, also known as the Baghdad Battery, is an artifact from antiquity that may have been able to produce an electrical current, but it took a couple of thousand years for us to get past the hurdles that kept electricity from being commonplace.

But once electricity was standardized, we had a boom of things that had never even been considered before: radio, Hollywood, and computing. So once computing got to a commodity phase, that's when we started to get conferences dedicated to the question: how can we reorganize our business processes using these new utility paradigms?

So that's when we got DevOps Days, which started in 2009, shortly after two Flickr employees, John Allspaw and Paul Hammond, gave a presentation at an O'Reilly conference titled “10+ Deploys per Day: Dev and Ops Cooperation at Flickr.” The term DevOps has matured since the inception of these conferences.

You should note that DevOps Days was founded by a consultant and project manager and Agile practitioner. So this person was not an engineer, didn’t have anything to do with cloud or cloud infrastructure, but saw the value of continual improvement in monitoring a system.

Let's talk about some of the best practices that are housed within DevOps.


DevOps Best Practices

Now that we have talked about how we got to DevOps, some of you may remember the old way of doing things. When computing was a product, practices arose around it being a product. One of those was maximizing MTBF, the mean time between failures. We focused on scaling up, capacity planning, disaster recovery, and release planning.

These were the kinds of things that we did back in the 90s and early 2000s. Once compute became a utility, DevOps practices took their place. Instead of scaling up, meaning adding more RAM to the servers in our data centers, we scaled out, meaning “let's just launch more computers.”

Instead of capacity planning, we're going to convert to distributed systems. This means we're going to have components all over the place, loosely coupled and talking to each other. We're not going to do disaster recovery, not in the way that we used to. Instead, we're going to design for failure.

In fact, Netflix has a program called Chaos Monkey that will randomly turn things off in your workload with the idea being that you should architect things to recover very quickly from those kinds of failures. And instead of release planning, we do continuous delivery. We push to production 30 times a day.
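The design-for-failure idea can be sketched as a small simulation: randomly terminate an instance, then check that the system restores itself. This is a hypothetical Python model of the principle, not Netflix's Chaos Monkey or any cloud provider's API; the `Fleet` class and the instance IDs are invented for illustration.

```python
import random


class Fleet:
    """Hypothetical fleet that keeps `desired` interchangeable instances running."""

    def __init__(self, desired: int):
        self.desired = desired
        self.instances: set = set()
        self._launched = 0
        self.reconcile()

    def _launch(self) -> str:
        # Every instance is built the same way, so any one can replace another.
        self._launched += 1
        return f"i-{self._launched:04d}"

    def reconcile(self) -> int:
        """Launch replacements until the fleet is back at its desired size."""
        started = 0
        while len(self.instances) < self.desired:
            self.instances.add(self._launch())
            started += 1
        return started


def chaos_experiment(fleet: Fleet, rng: random.Random) -> str:
    """Terminate one random instance, then let the fleet heal itself."""
    victim = rng.choice(sorted(fleet.instances))
    fleet.instances.discard(victim)
    replaced = fleet.reconcile()
    assert replaced == 1, "the fleet should replace exactly the instance it lost"
    return victim


if __name__ == "__main__":
    fleet = Fleet(desired=3)
    rng = random.Random(42)  # seeded so the experiment is repeatable
    for _ in range(5):
        killed = chaos_experiment(fleet, rng)
        print(f"terminated {killed}; running: {sorted(fleet.instances)}")
```

The point of the experiment is the assertion, not the termination: failure is expected, and the test checks that recovery happens without a human.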

That is the opposite of release planning, where weeks of development would go into a single launch day. Those product-era practices are now legacy.

Let's talk about some best practices for infrastructure though.

First, treat servers as cattle, not pets. That means servers are disposable. They should be configured at launch by a script or a machine image, and they should store little to no data directly, so if one runs into a problem, you can simply replace it.
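Here is a minimal sketch of the "cattle" mindset, assuming a hypothetical `LaunchTemplate` that bundles a machine image and a startup script. Servers are frozen, so they are never patched in place; healing and upgrading both work by launching replacements from a template. None of these names correspond to a real cloud SDK.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LaunchTemplate:
    """Everything needed to build a server from scratch (hypothetical fields)."""
    image_id: str        # machine image baked with the OS and application
    startup_script: str  # runs once at boot to finish configuration
    app_version: str


@dataclass(frozen=True)
class Server:
    """A disposable server: frozen, so it is never patched in place."""
    server_id: str
    template: LaunchTemplate
    healthy: bool = True


def launch(server_id: str, template: LaunchTemplate) -> Server:
    # All configuration comes from the template; persistent data is assumed
    # to live in external storage, so nothing is lost when a server is discarded.
    return Server(server_id, template)


def heal(servers: list, template: LaunchTemplate) -> list:
    """Replace unhealthy servers with fresh ones instead of repairing them."""
    return [s if s.healthy else launch(f"{s.server_id}-new", template) for s in servers]


def roll_out(servers: list, template: LaunchTemplate) -> list:
    """Upgrade by launching replacements from a new template, not by patching."""
    return [launch(f"{s.server_id}-new", template) for s in servers]


v1 = LaunchTemplate("img-web-v1", "#!/bin/sh\n/opt/app/configure.sh", "1.0")
fleet = [launch("web-1", v1), launch("web-2", v1), Server("web-3", v1, healthy=False)]
fleet = heal(fleet, v1)
fleet = roll_out(fleet, replace(v1, image_id="img-web-v2", app_version="1.1"))
```

Keeping server objects immutable makes the rule hard to break by accident: the only way to change a server is to replace it.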

Second, hands off. Use managed services wherever possible: use a managed database instead of running a VM with a database that you install on it. Third, continuous everything. Deploy to production as often as you want, so long as you can verify that the artifacts from each step of the DevOps Loop are good to go.

If you write code, you should have a test that verifies that the code is working correctly. If you have an entire application, you should at least have a test that verifies the application is behaving correctly or, you know, is responding to traffic. Likewise, script your infrastructure: infrastructure as code is repeatable and standardized.
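For the minimal "is it responding to traffic?" check, the Python standard library is enough. The `/health` endpoint and the stand-in `HealthHandler` application are assumptions for illustration; in a real pipeline, `smoke_test` would point at the deployed service's URL after each deploy.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Stand-in application exposing a hypothetical /health endpoint."""

    def do_GET(self):
        status = 200 if self.path == "/health" else 404
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"ok" if status == 200 else b"not found")

    def log_message(self, format, *args):
        pass  # keep test output quiet


def smoke_test(base_url: str, timeout_s: float = 2.0) -> bool:
    """Return True if the application answers /health with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout_s) as resp:
            return resp.status == 200
    except OSError:  # connection errors and HTTP error statuses both land here
        return False


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}"
    print("healthy" if smoke_test(url) else "unhealthy")
    server.shutdown()
    server.server_close()
```

A check this small is cheap enough to run after every deploy, which is what makes "deploy as often as you want" safe.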

You want to make sure everything you do can be done again and at a high frequency, and as often as people want. To adopt CI (continuous integration) and CD (continuous delivery), run your automated tests, run them frequently, and launch a process that will upgrade your software dependencies every night.

Anything that is considered ‘yak shaving’ should be automated away.

Some benefits of doing these things:

Minimizing the time to deploy a change is undeniably good. Customers are always happy when we can push a commit to production in seconds or minutes, as opposed to hours or days. Infrastructure that self-heals is a no-brainer.

I don't want to wear a pager or get woken up in the middle of the night to fix a broken hard disk. The system should just replace it on its own. Developers who are required to use standardized code formatting know their code is properly formatted and their tests pass before they even submit a merge request. In projects where we leverage Docker, developers can use the same Docker images in their local development environment that run in production.

The End of DevOps

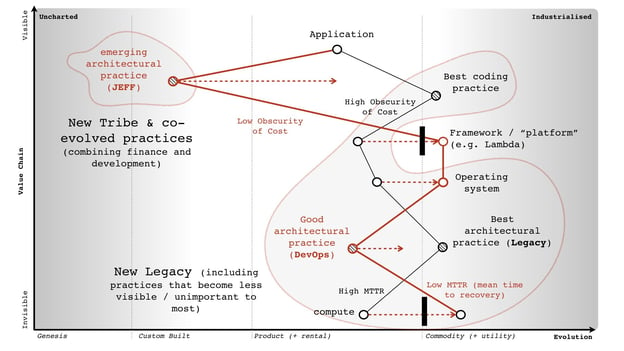

Now let's talk about why DevOps is not here to stay. The answer to that is ‘serverless’, AKA FaaS. What does that mean? Function as a service! This is another component that has been built on top of utility computing. Some folks are a little hung up on the name. Yes, of course, there are still servers underneath. They are just lower on the value chain. They are assumed to be a commodity that will be there, and nobody really cares about them.

In this map (a combined value chain map and component evolution map), you can see that compute jumped that barrier from product to commodity, and that DevOps practices arose and continued to evolve.

Bits or Pieces? By Simon Wardley


Operating systems became less of a business advantage and more of an implementation detail. The platform component jumped the barrier and became a utility. So the advent of serverless reveals a property of our source code and our applications that nobody really thinks about: their worth.

This is what is going to replace DevOps. It’s tentatively called FinOps: serverless allows us to meter and analyze the financial worth of the code we run based on factors like how much RAM it takes up and how much I/O (input/output) it needs. Businesses have debuted (and more will continue to debut) that specialize in just one function that accepts some input, returns some output, and does it well.
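To illustrate the kind of metering that makes this possible: serverless platforms such as AWS Lambda bill per request plus compute time weighted by configured memory (GB-seconds). The sketch below estimates a function's monthly cost from those two metrics. The unit prices are hypothetical placeholders, not any provider's published rates, and I/O costs are left out for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical unit prices; substitute your provider's published rates."""
    per_million_requests: float
    per_gb_second: float


def monthly_function_cost(invocations: int, avg_duration_ms: float,
                          memory_mb: int, pricing: Pricing) -> float:
    """Estimate one function's monthly cost from request count and GB-seconds."""
    # Compute is metered as duration (seconds) x configured memory (GB).
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_cost = invocations / 1_000_000 * pricing.per_million_requests
    compute_cost = gb_seconds * pricing.per_gb_second
    return request_cost + compute_cost


placeholder = Pricing(per_million_requests=1.00, per_gb_second=0.00002)
example = monthly_function_cost(2_000_000, avg_duration_ms=100, memory_mb=512,
                                pricing=placeholder)
```

With these placeholder prices, 2 million invocations averaging 100 ms at 512 MB come to 100,000 GB-seconds, or about $2.00 of compute plus $2.00 of requests. A function that is never invoked costs nothing, which is what makes per-function "worth" measurable in the first place.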

A service should do one thing, and it should do it extremely well. That's the same idea behind microservices, right? With serverless, you can use AWS Lambda and Amazon API Gateway, just as an example (plenty of other services out there do this), to launch a fully realized REST API without logging into or configuring a single server.
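For illustration, a single-purpose function behind API Gateway might look like the following Python sketch. `lambda_handler(event, context)` follows Lambda's Python handler convention, and the event and response shapes assume API Gateway's Lambda proxy integration; the greeting logic and the `name` query parameter are hypothetical.

```python
import json


def lambda_handler(event, context):
    """One function, one job: greet whoever is named in the query string.

    Assumes an API Gateway proxy integration, which passes query parameters in
    event["queryStringParameters"] (None when there are none) and expects a
    dict with statusCode, headers, and a string body in return.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local check with a hand-built event; no AWS account or server needed.
    fake_event = {"queryStringParameters": {"name": "Alice"}}
    print(lambda_handler(fake_event, context=None))
```

Because the handler is a plain function, it can be exercised locally with a hand-built event, as the `__main__` block does, before it is ever deployed.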

You don't have to worry about it because it's lower on the value chain map. You just pay for the actual requests that you service, not for any idle time. That's enormous. And this has been the case for six years. You might remember earlier when I mentioned edge computing. Well, serverless is extremely important to edge computing, because, for example, AWS Greengrass, which is a masterful play, allows you to run serverless functions directly on your distributed IoT devices.

Conclusion

Very cool stuff. So, in conclusion. I think I can sum this up thusly: DevOps is a culture.

It is the term that we use to describe what happens when you combine people with agile-like processes, technology, automation, and constant improvement informed by continuous feedback.

That is what I believe DevOps is, and this is what I would strive for all of us to adhere to, constant improvement informed by continuous feedback.

That sounds pretty good. Feel free to offer up recommendations or challenge each other so that we can better adhere to those kinds of paradigms. The DevOps Loop itself reminds me very much of the Red Queen hypothesis, a metaphor proposed in 1973 which states that an organism (or a business, for that matter) must constantly adapt and evolve, not to gain an advantage, but simply to survive, because every other organism is doing the same.

The only way to futureproof your workload, your work, or your company is to constantly evaluate your situation and adapt. So we have this new, amazing product called utility computing. Well, how can we evaluate that situation, and what can we adapt to become? Here's another one that I will leave you with: in Lewis Carroll's 1871 novel, Through the Looking-Glass, the Red Queen explains to Alice the nature of Looking-Glass Land.

She says, “Now, here, you see, it takes all the running you can do, to keep in the same place.” All other companies are adopting these diffused components, these things that are now widely available, which means that we all need to do it, too. So that's all DevOps is: a set of practices to help us continually improve.

Is it related to cloud computing? Sure. Is it synonymous? Not by any stretch. And it's certainly going to go away, I would guess within the next 10 years.

Hawk, a dedicated Princess Bride fan, is Mindgrub’s Director of Cloud Engineering and a self-proclaimed ‘geriatric millennial’. He is an expert in his field and has a passion for all things cloud engineering. Keep an eye out for more informational segments with Mindgrub on our Twitter, LinkedIn, and Facebook!

If you want to learn more about our web and engineering services, contact our team.